I am not a developer, but I work with developer tools all day. This can be frustrating, because a lot of tools made for developers assume knowledge that I lack.

Of course, if that weren't the case, there would be less need for technical writers, and my work would be harder to come by!

In any case, after spending the last few days migrating my job's documentation to the CircleCI 2.0 configuration, here are some tips I picked up:

Non-interactive shells

This one can be tricky if you don't keep it in mind. Because CircleCI runs builds in a non-interactive environment, your output can get... funky. For example, here's the output of `sculpin generate`, which we use to turn our Markdown files into HTML, when run locally through the CircleCI CLI:

Edit: While importing this blog from WordPress, I discovered that this image is missing. This blog post is over 5 years old, so I'm not gonna spend a lot of effort recreating it.

Here's the output from the build on CircleCI's platform:

Sculpin assumes an interactive shell in which it can rewrite its output as it goes. CircleCI's environment is set up for better logging, not better human readability (as it should be).
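Command-line tools usually cope with this by checking whether standard output is a terminal before redrawing a line in place. Here's a minimal sketch of that general pattern in shell. `show_progress` is a hypothetical function, not Sculpin's actual code, and the exact check a given tool uses may differ. In a terminal it overwrites a single line with carriage returns; otherwise it prints each update on its own line, which is the kind of output that reads well in a CI log.

```shell
#!/bin/sh
# Sketch: redraw progress in place only when stdout is a terminal.
# show_progress is a hypothetical example, not taken from Sculpin.
show_progress() {
  total=$1
  i=1
  while [ "$i" -le "$total" ]; do
    if [ -t 1 ]; then
      # Interactive: "\r" returns the cursor to the start of the line,
      # so each update overwrites the previous one.
      printf '\rProcessing %d/%d' "$i" "$total"
    else
      # Non-interactive (pipe, log file, often CI): one plain line per update.
      printf 'Processing %d/%d\n' "$i" "$total"
    fi
    i=$((i + 1))
  done
  # In a terminal, finish the redrawn line with a newline.
  if [ -t 1 ]; then
    printf '\n'
  fi
}

show_progress 3
```

Saved as a script (say, a hypothetical `progress.sh`), running it directly in a terminal shows one updating line, while `./progress.sh | cat` prints three separate lines.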

This is, of course, a trivial example: I could add `--quiet` to my generate command to make the CI output nicer. But the fix isn't always that simple...

Rsync output on CircleCI

Part of my build process involves copying my compiled docs to a staging environment. Our scripts use rsync, a common choice for transferring files from A to B.

The Problem

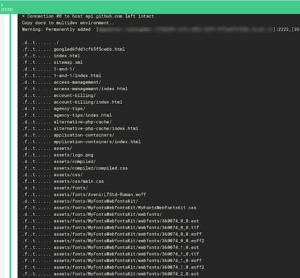

No matter what permutation of logging flags I gave rsync, the only output I could get from my CI builds was empty, or a list of every single file it was checking for changes:

Not a critical problem, but it certainly reduces the usefulness of the output.

The Solution

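I could pipe the rsync command directly through grep, but that would interfere with the while loop I wrapped rsync in, which checks rsync's exit code to determine success. A pipeline's exit status is that of its last command, so the loop would see grep's exit code instead of rsync's. Instead, I constructed my rsync command as follows: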

```shell
rsync --checksum --delete-after -rtlzq --ipv4 \
  --info=BACKUP,DEL \
  --log-file=multidev-log.txt \
  -e 'ssh -p 2222 -oStrictHostKeyChecking=no' \
  output_prod/docs/ \
  --temp-dir=../../tmp/ \
  $normalize_branch.$STATIC_DOCS_UUID@appserver.$normalize_branch.$STATIC_DOCS_UUID.drush.in:files/docs/
```

That's a lot to unpack, I know. The key flags are `-q` (bundled into `-rtlzq`), which suppresses rsync's normal output, and `--log-file=multidev-log.txt`, which writes a record of the transfer to a log file instead. Then, once my loop has exited, I can run:

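```shell
grep -E '<|>|deleting' ./multidev-log.txt || true
```

This searches the log for lines containing the characters `<` or `>` (in rsync's itemized output, these mark files sent to or received from the remote) or the word `deleting`. Because grep exits with code 1 when it finds no matches, appending `|| true` makes the line exit with code 0 either way, keeping my script from failing when there's nothing to report.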
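Here's a minimal, self-contained sketch of the overall pattern: a retry loop that judges success by the command's own exit code, followed by a single filtering pass over the log once the loop is done. The `retry` and `fake_sync` functions are hypothetical. `fake_sync` stands in for the real rsync call so the sketch runs without a remote server: it fails once, then appends itemized-style lines to the log. Those log lines are illustrative, not captured rsync output.

```shell
#!/bin/sh
# Sketch: retry a sync until it exits 0, then filter its log once.
# retry and fake_sync are hypothetical helpers for illustration.

LOG_FILE=./multidev-log.txt

retry() {
  # Usage: retry MAX_ATTEMPTS COMMAND [ARGS...]
  max_attempts=$1
  shift
  attempt=1
  while [ "$attempt" -le "$max_attempts" ]; do
    # Success is judged by the command's own exit code,
    # so the command is not piped through grep here.
    if "$@"; then
      return 0
    fi
    echo "Attempt $attempt/$max_attempts failed; retrying" >&2
    attempt=$((attempt + 1))
  done
  return 1
}

# Stand-in for "rsync ... --log-file=...": fails on the first call,
# succeeds on the second and appends illustrative log lines.
calls=0
fake_sync() {
  calls=$((calls + 1))
  [ "$calls" -ge 2 ] || return 1
  printf '%s\n' \
    '2018/03/01 12:00:00 [123] building file list' \
    '2018/03/01 12:00:01 [123] <f.st...... docs/index.html' \
    '2018/03/01 12:00:01 [123] *deleting   docs/old.html' >> "$LOG_FILE"
}

: > "$LOG_FILE"   # start with an empty log
retry 3 fake_sync || exit 1

# Show only transfers and deletions; "|| true" keeps a grep with
# no matches (exit code 1) from failing the script.
grep -E '<|>|deleting' "$LOG_FILE" || true
```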

Now my output is concise and useful!

(By the way, I learned a lot of useful information about rsync output from this blog post, run through Google Translate.)

Dockerize for background processes

IMHO, this tool is poorly named. While its original purpose was (I assume) CI builds involving multiple Docker containers, it's helpful even in a single-container environment.

The Problem

I was seeing intermittent build failures coming from my Behat tests:

Behat is configured to test the files served by my Sculpin server on port 8000. The Sculpin step wasn't failing; the server just wasn't starting up fast enough, so Behat sometimes ran before anything was listening on that port.

The Solution

My initial response to this problem was to add `sleep 5` to my Behat step, giving Sculpin more time to initialize. But then my friend Ricardo told me about Dockerize. Because my Docker image is built from a CircleCI image, Dockerize was already installed. So I added a new step to `.circleci/config.yml`:

```yaml
- run:
    name: Start Sculpin
    command: /documentation/bin/sculpin server
    background: true

- run:
    name: Wait for Sculpin
    command: dockerize -wait tcp://localhost:8000 -timeout 1m

- run:
    name: Behat
    command: |
      /documentation/bin/behat
```

Dockerize checks for a service listening on the port and keeps retrying until it finds one or the timeout (one minute here) expires.
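If Dockerize isn't available in your image, the same wait-and-retry idea can be approximated in plain shell. This is a rough sketch, not how Dockerize itself is implemented: `wait_for` and `port_open` are hypothetical helpers, and `port_open` assumes the image has a netcat that supports `-z`, which not every image ships.

```shell
#!/bin/sh
# Sketch of a wait-and-retry loop, similar in spirit to "dockerize -wait".
# wait_for and port_open are hypothetical helpers, not part of Dockerize.

wait_for() {
  # Usage: wait_for TIMEOUT_SECONDS INTERVAL_SECONDS COMMAND [ARGS...]
  # INTERVAL_SECONDS should be at least 1, or elapsed never advances.
  timeout=$1
  interval=$2
  shift 2
  elapsed=0
  until "$@"; do
    if [ "$elapsed" -ge "$timeout" ]; then
      echo "Timed out after ${timeout}s waiting for: $*" >&2
      return 1
    fi
    sleep "$interval"
    # Counts only time spent sleeping, so the timeout is approximate.
    elapsed=$((elapsed + interval))
  done
  return 0
}

# Assumption: netcat is installed and supports -z (probe without sending data).
port_open() {
  nc -z localhost 8000
}

# Example use in a CI step (commented out so the sketch doesn't block):
# wait_for 60 1 port_open && /documentation/bin/behat
```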

Now I have one less reason for my builds to fail...

Do you have any tips, tricks, or answers to common "Gotchas" for continuous integration testing? If so, please share them!